There’s a kind of magic to a perfectly timed lip sync video. When the expressions, timing, and mouth movements all come together, it feels seamless, as if the performer truly owns the words or lyrics. But when it goes awry, it’s impossible to ignore. A half-second delay, a frozen smile, or rigid pacing can shatter the illusion in an instant.

That is why marketers and producers are turning to tools like Pippit. Its AI video generator brings scripts and avatars to life with realistic synchronization that steers clear of those jarring issues. Instead of letting sync failures drag your content down, you can produce professional videos that captivate viewers and keep them coming back for more.

When timing trips you up

The number one immersion killer? Off-beat timing. Even the slightest mismatch between words and lip movements makes a performance look robotic or artificial. Human beings are innately sensitive to rhythm and flow, so timing errors stand out immediately.

- The mouth lags behind the sound, creating awkward delays.

- Syllables do not match the beats of a song, making the lip sync look forced.

- Long pauses make avatars appear frozen, dragging viewers out of the experience.

The fix is to make the sync as natural as human dialogue, where short pauses, breaths, and changes in pace matter as much as the words themselves.
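To make the idea of lag concrete, here is a minimal sketch of one way to measure it. It assumes you already have two per-frame signals extracted by some other tool: the loudness of the audio and how open the mouth is. The function name `estimate_lag_frames`, the sample data, and the 25 fps frame rate are all illustrative assumptions, not part of Pippit or any specific product.

```python
def estimate_lag_frames(audio_energy, mouth_open, max_lag):
    """Return the frame shift that best aligns mouth_open with audio_energy.

    A positive result means the mouth trails the audio by that many frames.
    Both inputs are hypothetical per-frame signals sampled at the same rate.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Sum of products over the frames where the two signals overlap.
        score = 0.0
        for i, energy in enumerate(audio_energy):
            j = i + lag
            if 0 <= j < len(mouth_open):
                score += energy * mouth_open[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Illustrative data: two loud syllables, with the mouth opening 2 frames late.
audio = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0]
mouth = [0, 0, 0, 0, 1, 0, 0, 0, 1, 0]

fps = 25  # assumed frame rate
lag = estimate_lag_frames(audio, mouth, max_lag=3)
lag_ms = lag * 1000 / fps
print(f"Mouth trails audio by {lag} frames ({lag_ms:.0f} ms)")
```

With this sample data the sketch reports a 2-frame (80 ms) lag. Real footage is noisier, so treat the result as a rough indicator rather than an exact measurement.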

Expressions: the overlooked hero of believability

Even technically flawless sync falls flat without matching expressions. Picture a character announcing great news with a vacant expression: it shatters immersion immediately. Expressions have to reflect the audio’s tone, whether joy, anger, or curiosity.

Lip sync AI tools now capture nuanced changes in brows, eyes, and smiles to make the delivery feel alive. Without them, you risk the uncanny valley, where people sense something is off but can’t quite put their finger on what.

Pacing issues and speech flow

Pacing isn’t simply a matter of timing individual words; it’s the way the entire message unfolds. If an avatar talks too fast, it comes across as frantic. Too slow, and the energy drains away. Poor pacing creates a mismatch between the energy of the voice and the character’s movement.

The secret is to adjust not only the lip sync, but also the gestures and the pace of the performance. A cheerful jingle should be delivered at a brisk tempo, whereas a serious message needs breathing room for the emotion to land.

Worst mistakes that cause lip syncs to sink

Here’s a rundown of the most frequent failures that ruin otherwise good content:

- Flat faces: emotion that doesn’t match the words.

- Robot rhythm: evenly spaced words with no natural pauses.

- Lagging sync: lips trailing the audio by half a second or so.

- Repetitive gestures: characters cycling through the same gesture over and over.

- Ignoring context: happy delivery for sad lines or sad delivery for happy lines.

All of these are preventable with the right attention to detail — and the right tools.
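As a rough illustration of how "robot rhythm" could be spotted, the sketch below measures how much the pauses between words vary. It assumes you have word-level timestamps in milliseconds from some transcription or alignment tool. The function name `pause_variation`, the sample timings, and the 0.2 threshold are hypothetical choices for demonstration only.

```python
from statistics import mean, pstdev


def pause_variation(word_times_ms):
    """Coefficient of variation of the gaps between consecutive words.

    word_times_ms: list of (start_ms, end_ms) tuples in speaking order.
    Values near 0 mean every pause is about the same length.
    """
    gaps = [
        next_start - end
        for (_, end), (next_start, _) in zip(word_times_ms, word_times_ms[1:])
    ]
    if len(gaps) < 2:
        raise ValueError("need at least three words to compare pauses")
    if mean(gaps) == 0:
        return 0.0  # all words run together, so there is no variation
    return pstdev(gaps) / mean(gaps)


# Illustrative timings: identical 200 ms pauses vs. uneven, speech-like pauses.
robotic = [(0, 300), (500, 800), (1000, 1300), (1500, 1800)]
natural = [(0, 300), (350, 800), (1400, 1700), (1800, 2000)]

ROBOTIC_THRESHOLD = 0.2  # arbitrary cut-off chosen for this example

for name, timings in (("robotic", robotic), ("natural", natural)):
    cv = pause_variation(timings)
    verdict = "too uniform" if cv < ROBOTIC_THRESHOLD else "varied"
    print(f"{name}: variation {cv:.2f} ({verdict})")
```

A single number like this cannot judge a performance on its own, but it can flag clips worth a second look before publishing.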

Karaoke fun: using Pippit to hit every note

Lip syncing doesn’t have to be a pain. Pippit keeps it fun with avatars designed to keep timing, expressions, and pacing tight. Its lip sync AI goes beyond basic mouth movements to make your videos look and feel believable.

Here’s how you can do it:

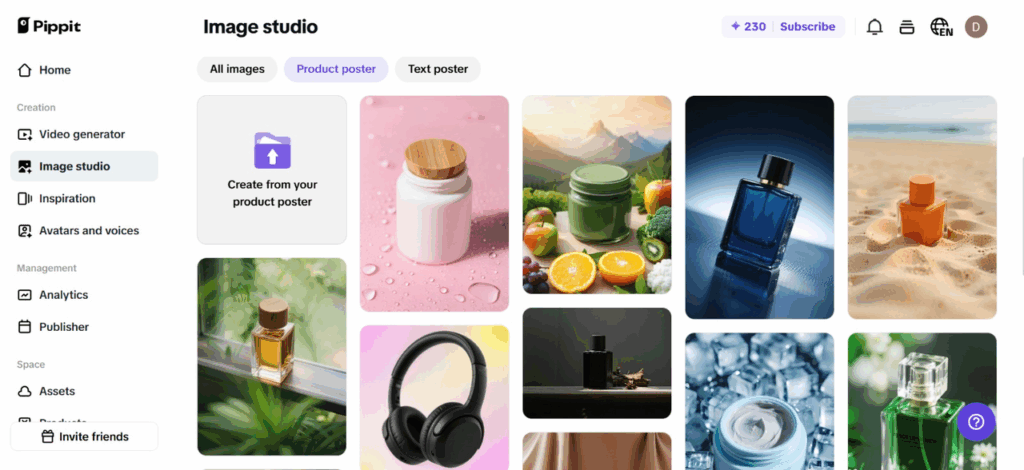

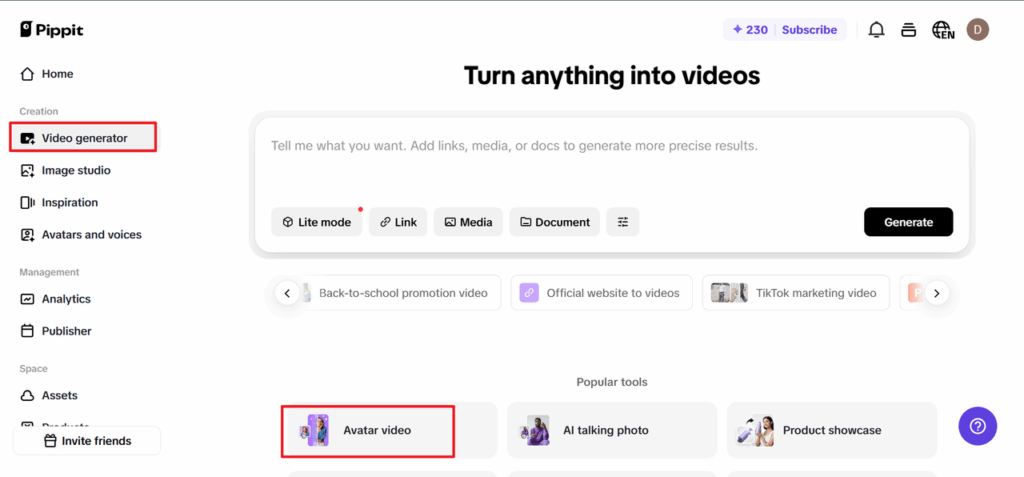

Step 1: Go to the video generator and choose avatars

Log in to Pippit and navigate to Video generator from the left-hand menu. In Popular tools, choose Avatars to select or create AI avatars for your videos. With this feature, you can easily sync voiceovers with avatars for lively, engaging content.

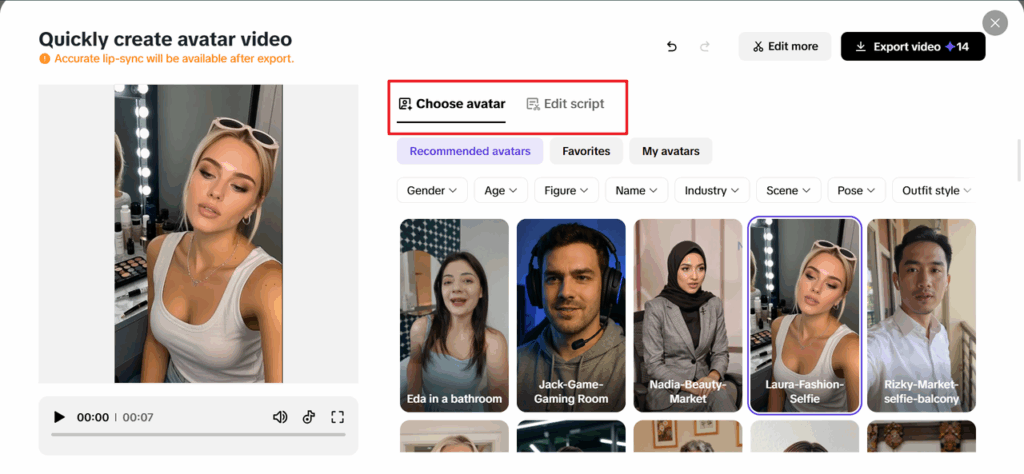

Step 2: Select your avatar and edit the script

Once you have opened the avatar tools, choose the desired avatar from the Recommended avatars section. You can filter avatars by age, industry, gender, and more to find the right one for your video. After selecting an avatar, click Edit script to personalize the dialogue. You can add text in various languages, and the avatar will lip-sync to it. To polish your video further, scroll down to Change caption style and pick a caption style that suits your video’s theme.

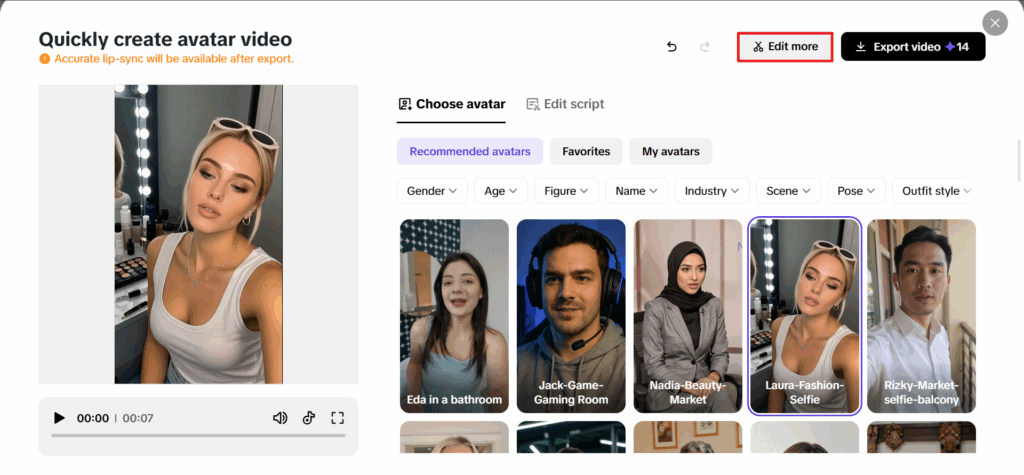

Step 3: Share your video

After applying lip sync, click Edit more to further fine-tune your video. You can use the video editor to edit the script, change voice timing, or adjust facial expressions for greater accuracy.

You can also add text overlays and background music to give the final product extra polish. Once you’re happy with the video, click Export to save it in your desired format.

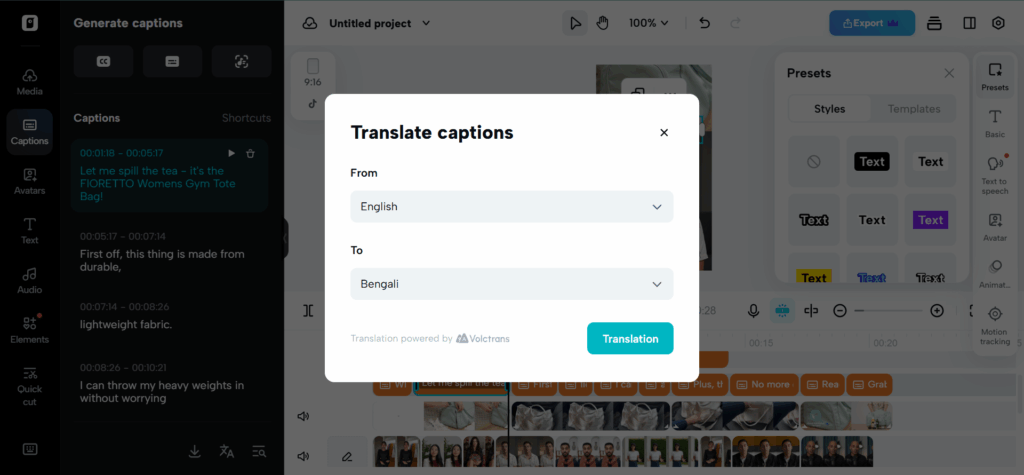

How translation complicates lip syncing

If syncing within one language is challenging, try syncing across several. A translated script tends to change pacing, syllable counts, and emphasis, so syncing requires additional finesse. That is why Pippit’s video translator is a strong ally.

By syncing translated captions with voices, producers can localize their videos for new markets while keeping immersion intact. But once again, pacing and expressions need to adapt as well, or the delivery will feel off even if the words are correct.
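A quick back-of-the-envelope check shows why translation affects pacing. The sketch below assumes you know how long the original line runs and how long the translated voiceover runs, then computes the playback rate needed to fit the translation into the same slot. The function name `fit_rate` and the 0.9 to 1.15 "comfortable" range are illustrative assumptions, not published limits.

```python
def fit_rate(original_s, translated_s, min_rate=0.9, max_rate=1.15):
    """Return (rate, ok) for fitting translated speech into the original slot.

    rate > 1 means the translated audio must be sped up; rate < 1 means it
    must be slowed down. ok is True when rate is inside the assumed range.
    """
    if original_s <= 0 or translated_s <= 0:
        raise ValueError("durations must be positive")
    rate = translated_s / original_s
    return rate, min_rate <= rate <= max_rate


# Illustrative durations in seconds.
rate_a, ok_a = fit_rate(10.0, 11.0)  # slightly longer translation
rate_b, ok_b = fit_rate(10.0, 14.0)  # much longer translation

print(f"Line A: play at {rate_a:.2f}x, within range: {ok_a}")
print(f"Line B: play at {rate_b:.2f}x, within range: {ok_b}")
```

When a line falls outside the range, shortening the translated script or extending the shot is usually a better fix than forcing the speed change.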

Turning mistakes into opportunities

Ironically, lip sync fails can also go viral, though rarely in the way brands would want. Consider cringeworthy celebrity dubs or ill-timed campaign commercials that audiences share as memes rather than ads. Handled well, though, even errors can turn into opportunities. A lighthearted behind-the-scenes look at a before-and-after fix can make your brand more relatable and earn a few laughs alongside the finished product.

The difference between a video that flops and one that flows

The best lip sync videos don’t merely avoid errors; they create flow. Viewers forget they’re watching an avatar or a virtual production and become fully absorbed in the story, song, or message. The immersion is sustained from beginning to end.

Videos that flop, however, draw attention to the mechanics of the sync rather than the content itself. Instead of thinking about your brand, viewers notice the clunky lag or stiff delivery. The solution is simple: tools and techniques that focus on realism.

Conclusion: keep your sync smooth with Pippit

At the end of the day, lip sync videos rise or fall on immersion. Get timing, expressions, and pacing right, and your audience forgets about the tech — they simply enjoy the story you’re telling. Slip up, and even the slickest idea can sink.

With Pippit, you can put those pitfalls behind you. Its AI-powered avatars, high-end syncing, and user-friendly editing tools help you avoid mistakes and deliver consistent, entertaining content.

So if you’re ready to transform your lip syncs from cringeworthy experiments into engaging performances, let Pippit help you sync, not sink.
